
Search - "archiving"

-

It's funny how doing something for ages, but technically kinda the wrong way, makes you hate that thing with a fucking passion.

In my case I am talking about documentation.

During my studies, we were required to write documentation for every project, which is actually quite logical. But although I'm fine with some documentation and some project and architecture design, they took this shit to the fucking limit.

Here's an example of what we had to write every single time (YES, FOR EVERY MOTHERFUCKING PROJECT) and roughly how many pages each one took (of custom content; yes, we all had templates):

Phase 1 - Application design (before doing any programming at all):

- PvA (Plan van Aanpak: the general plan for how to do the project, from who was participating to how you report to your clients and so on) - pages: 7-10.

- Functional design, well, the application design in an understandable way. We were also required to design interfaces. (Yes, I'm a backender who can only grasp the basics of GIMP and doesn't care about doing frontend.) - pages: 20-30.

- Technical design (including DB schema, class diagrams and so fucking on), mostly self-explanatory I think - pages: 20-40.

Phase 2 - 'Writing' the application

- Well, writing the application of course.

- Test plan (so yeah, no actual fucking cases yet, just how you fucking plan to test it, what tools you need and so on. Needed? Yes. But not as ridiculous as this) - pages: 7-10.

- Test cases: one for every function you have (read: every button click etc. is a 'function') - pages: one Excel sheet, usually at least 20 test cases.

Phase 3 - Application Implementation

- Implementation plan, describes what resources will be needed and so on (yes, I actually had to write down 'keyboard' a few times, like what the actual motherfucking fuck) - pages: 7-10.

- Acceptance test plan (the plan and the actual tests, so two files, one of which is an Excel/LibreOffice Calc file) - pages: 7-10.

- Implementation evaluation, well, an evaluation. Usually about 7-10 FUCKING pages long as well (!?!?!?!)

Phase 4 - Maintaining/managing of the application

- Management/maintenance document - well, every FUCKING rule. Usually 10-20 pages.

- SLA (Service Level Agreement) - 20-30 pages.

- Content Management Plan - explains itself, same as above so 20-30 pages (yes, what the fuck).

- Archiving document, aka how you're going to archive shit - pages: 10-15.

I still can't grasp why they were surprised that students lost all motivation after realizing they'd have to spend about 1-2 weeks BEFORE being allowed to write a single line of code!

The calculation (which mostly takes the worst-case scenario, aka the most pages possible) comes to about 230 pages. Keep in mind that some pages will be screenshots etc., but a lot are full text.

Yes, I understand that documentation is needed, but the way we had to do it? Sorry, but that's just not how you motivate students to work on their studies!

Hell, students who wrote the entire project in one night, working perfectly and even with Easter eggs and so on, sometimes got bad grades BECAUSE THEIR DOCUMENTATION WASN'T GOOD ENOUGH.

For comparison, at my last internship I had to write documentation for the REST API I was writing. Three pages, enough for the person who had to work with it! YES, THREE PAGES FOR THE WHOLE MOTHERFUCKING PROJECT.

This is why I FUCKING HATE the word 'documentation'.

-

This story starts over two years ago... I think I'm doomed to repeat myself till the end of time...

Feb 2014

[I'm thrust into the world of Microsoft Exchange and get to learn PowerShell]

Me: I've been looking at email growth and at this rate you're gonna run out of disk space by August 2014. You really must put in quotas and provide some form of single-instance archiving.

Management: When we upgrade to the next version we'll allocate more disk, just balance the databases so that they don't overload in the meantime.

[I write custom scripts to estimate mailbox size patterns and move mailboxes around to avoid uneven growth]

Nov 2014

Me: We really need to start migration to avoid storage issues. Will the new version have quotas, and have we sorted out our retention issues?

Management: We can't implement quotas, it's too political and the vendor we had is on the nose right now so we can't make a decision about archiving. You can start the migration now though, right?

Me: Of course.

May 2015

Me: At this rate, you're going to run out of space again by January 2016.

Management: That's alright, we should be on track to upgrade to the next version by November so that won't be an issue 'cos we'll just give it more disk then.

[As time passes, I improve the custom script I use to keep everything balanced]

Nov 2015

Me: We will run out of space around Christmas if nothing is done.

Management: How much space do you need?

Me: The question is not how much space... it's until when do you want the existing storage to last?

Management: October 2016... we'll have the new build by July and start migration soon after.

Me: In that case, you need this many hundreds of TB

Storage: It's a stretch but yes, we can accommodate that.

[I don't trust their estimate so I tell them it will last till November with the added storage but it will actually last till February... I don't want to have this come up during Xmas again. Meanwhile my script is made even more self-sufficient and I'm proud of the balance I can achieve across databases.]
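
The "how long do you want it to last?" reasoning boils down to a linear projection. Here is a minimal sketch of it. It assumes steady month-over-month growth measured in TB. The function names and example figures are hypothetical and are not taken from the author's PowerShell script. The `buffer_months` parameter mirrors quietly padding the estimate so the crunch doesn't land at Christmas.

```python
def months_until_full(used_tb: float, capacity_tb: float, growth_tb_per_month: float) -> float:
    """Months of runway left, assuming linear growth (a simplifying assumption)."""
    if growth_tb_per_month <= 0:
        return float("inf")  # not growing: the storage never fills up
    return max(0.0, (capacity_tb - used_tb) / growth_tb_per_month)


def extra_storage_needed(
    used_tb: float,
    capacity_tb: float,
    growth_tb_per_month: float,
    months_to_last: float,
    buffer_months: float = 0.0,
) -> float:
    """Extra TB required so storage lasts `months_to_last` (plus a buffer) more months."""
    projected_tb = used_tb + growth_tb_per_month * (months_to_last + buffer_months)
    return float(max(0.0, projected_tb - capacity_tb))


if __name__ == "__main__":
    # Hypothetical figures: 800 TB used of 900 TB, growing 25 TB/month.
    print(months_until_full(800, 900, 25))                          # 4.0
    print(extra_storage_needed(800, 900, 25, 11))                   # 175.0
    print(extra_storage_needed(800, 900, 25, 11, buffer_months=3))  # 250.0
```

Asking for a target date instead of a TB figure means the answer falls straight out of `extra_storage_needed`.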

Oct 2016 (last week)

Me: I note there is no build and the migration is unlikely since it is already October. Please be advised that we will run out of space by February 2017.

Management: How much space do you need?

Me: Like last time, how long do you want it to last?

Management: We should have a build by July 2017... so, August 2017!

Me: OK, in that case we need hundreds more TB.

Storage: This is the last time. There's no more storage after August... you already take more than a PB.

Management: It's OK, the build will be here by July 2017 and we should have the political issues sorted.

Sigh... No doubt I'll be having this conversation again in July next year.

On the upside, I've decided to rewrite my script to make it even more efficient, because I've learnt a lot since the script's inception over two years ago... it is soooo close to being fully automated, and one of these days I will see the database growth graphs produce a single perfect line showing a balance in both size and growth. I live for that Nirvana.
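
The author's script isn't shown, so this is only an illustration of one common way to balance mailboxes across databases. It uses a greedy "largest first" heuristic that places each mailbox on the database with the smallest projected total. The sketch assumes each mailbox has a known current size and monthly growth (both hypothetical inputs). It also assumes that balancing on projected size after `horizon_months` approximates balancing "both size and growth". It ignores the cost of actually moving mailboxes, which a real script would have to weigh.

```python
import heapq


def plan_placement(
    mailboxes: dict[str, tuple[float, float]],
    databases: list[str],
    horizon_months: float = 6.0,
) -> dict[str, list[str]]:
    """Greedy largest-first placement of mailboxes onto databases.

    mailboxes maps name -> (current_size_gb, growth_gb_per_month).
    Each mailbox is weighted by its projected size after `horizon_months`,
    then assigned to whichever database currently has the smallest total.
    """
    def projected(item: tuple[str, tuple[float, float]]) -> float:
        size_gb, growth_gb = item[1]
        return size_gb + growth_gb * horizon_months

    # Min-heap of (projected load, database name): the least-loaded DB pops first.
    heap = [(0.0, db) for db in databases]
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {db: [] for db in databases}

    # Place the biggest (projected) mailboxes first; smaller ones fill the gaps.
    for item in sorted(mailboxes.items(), key=projected, reverse=True):
        load, db = heapq.heappop(heap)
        plan[db].append(item[0])
        heapq.heappush(heap, (load + projected(item), db))
    return plan


if __name__ == "__main__":
    boxes = {"alice": (10.0, 0.5), "bob": (8.0, 1.0), "carol": (6.0, 0.0), "dave": (4.0, 0.5)}
    print(plan_placement(boxes, ["DB1", "DB2"], horizon_months=6))
```

Weighting by projected size rather than current size keeps a small but fast-growing mailbox from piling onto an already busy database.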

-

TFW your client's git policies are so draconian that the dev teams use "develop" as trunk, and completely ignore the release process.

I wrote up 50 pages of git standards, documentation and procedure for a client. Bad Indian director 9000 decides that the admin (also Indian), who specializes in ClearCase and has no git or development experience, is more qualified to decide, and lets him set the policy.

FF to today:

- documentation, mostly contradictory, is copy-pasted from the Atlassian wiki

- Sourcetree is the standard

- no force pushing of any branches, including work branches

- no ff-merge

- no rebasing allowed

- no ssh, because he couldn't figure it out...errr it's "insecure"

- all repos have random abbreviated names that are unintelligible

- gitflow, but with pull requests and no trust

- only project managers can delete a branch

- long lived feature branches

- only project managers can conduct code reviews

- hotfixes must be based off develop

- hotfixes must go in the normal release cycle

- releases involve creating a ticket to have an admin create a release branch from your branch, creating a second ticket to stage the PR, a third ticket to review the PR (because only admins can approve release PRs), and a fourth ticket to merge it in

- rollbacks require director signoff

- at the end of each project the repo must be handed to the admin on a burned CD for "archiving"

And so no one actually uses the official release process, and just does releases out of dev. If you're wondering if IBM sucks, the answer is more than you can possibly imagine.

-

"Tar up your projects as version control."

- CS teacher

I understand git is hard (just the awkward syntax) and not part of a curriculum, but can it at least be suggested? A whole year later, I found out about git and it has made CS projects so much easier.

git commit -a -m "No more tape archiving"

-

Me: ok, time to archive this shit and publish :D

Xcode: ok. Archiving started

10min later archiving done

Publishing to App Store Connect,

5 min later, sorry, we can't publish this cuz u haven't changed the build number from 2 to 3 ¯\_(ツ)_/¯

Me:😠ok, whatevs, let's do this, archive

Xcode: ok, archiving started

10min later, archiving done!

Me: click next to upload to app store connect, 5 min later...

XCODE: SORRY, U CAN ONLY PUBLISH FROM XCODE GMS TO APP STORE CONNECT

Me:😤 OMG, OK 3rd time is the charm...

So now Xcode has started archiving, I hope nothing happens again 😬

-

Aaaaaaaargh!! Fing ashole!!

I got a major blocker reported and tried to connect to the client: two of the user accounts were locked out because some genius used last month's password too many times.. FUUUU!! This happens almost every month!! FU! I go to the support dept. to check WTH is going on with those user accounts and get told the VPN is fucked up anyway, so I won't be able to connect in any case (disconnecting, bad transfer rate, it has the flu or pre-birth cramps... whatever...). OK, I ask if anyone notified our network admins and theirs.. And in response one guy mumbles something... I asked, really really pissed off (due to the seriousness of the situation: we have max 8h to fix blockers and must check what is going on within minutes), whether he was talking to me and answering my question or just talking to himself. He then said, a little more audibly: we are all unable to work, you are not the only one with this problem & if you have a solutio... I had already stormed out. Yes, everyone has problems connecting; no, not everyone has a fucking blocker assigned to them!! A major malfunction on our system is not the same as archiving old processing data!!!

Simple yes or no question: did anyone notify our network admins & the client's network admins?! And the client's management, that we have technical problems and cannot check the blocker situation immediately?! And I get a mumbling, incompetent response... OmFG, yes, I have a solution for you!! Go and jump off the terrace!!

-

I think I made someone angry, then sad, then depressed.

I usually shrink a VM before archiving it, to have a backup snapshot as a template. So the workflow is: prepare, test, shrink, backup -> template, document.

Shrinking means... Resetting root user to /etc/skel, deleting history, deleting caches, deleting logs, zeroing out free HD space, shutdown.

A coworker wanted to prep a VM for Docker (stuff he's experienced with, not me) so we could mass-roll out the template for migration after I converted his steps into Ansible or the template.

I gave him SSH access, explained the usual stuff and explained in detail the shrinking part (which is a script that must be explicitly called and has a confirmation dialog).

Weeeeellll. Then I had a lil meeting, then the postman came, then someone called.

I had... Around 30 private messages afterwards...

- it took him ~ 15 minutes to figure out that the APT cache was removed, so searching won't work

- setting up APT lists by copy pasta is hard as root when sudo is missing....

- seems like he only uses aliases; since root had a default skel, none of the aliases from his "private home" were there

- Well... VIM was missing, as I hate VIM (personal preferences xD)... Which made him cry.

- He somehow managed to get Docker working as "it should" (read: working like he expects it to, but that's not my beer).

While reading all this -sometimes very whiney- crap, I went to the fridge and got a beer.

The last part was golden.

He explicitly called the shrink script.

And guess what, after a reboot... History was gone.

And the last message said:

Why did the script delete the history? How should I write the documentation? I dunno what I did!

*sigh* I expected the worst, got the worst, and a good laugh in the end.

Guess tomorrow I'll be babysitting someone who's clearly unable to think for himself and/or listen....

Yay... 4h plus phone calls. *cries internally*

-

Xcode's taking an eternity just to archive a build. And what's worse, the whole system turns sluggish, so I can't do anything else while it's archiving.

-

Due to devRant's recent hiccups (subs disappearing from users and the app disappearing from stores), I wanted to lay out everything that has built up in my mind about replacing it. I want to see if y'all have some bright thoughts before this imaginary train makes it to the last station.

https://techhub.social/@vintprox/...

-

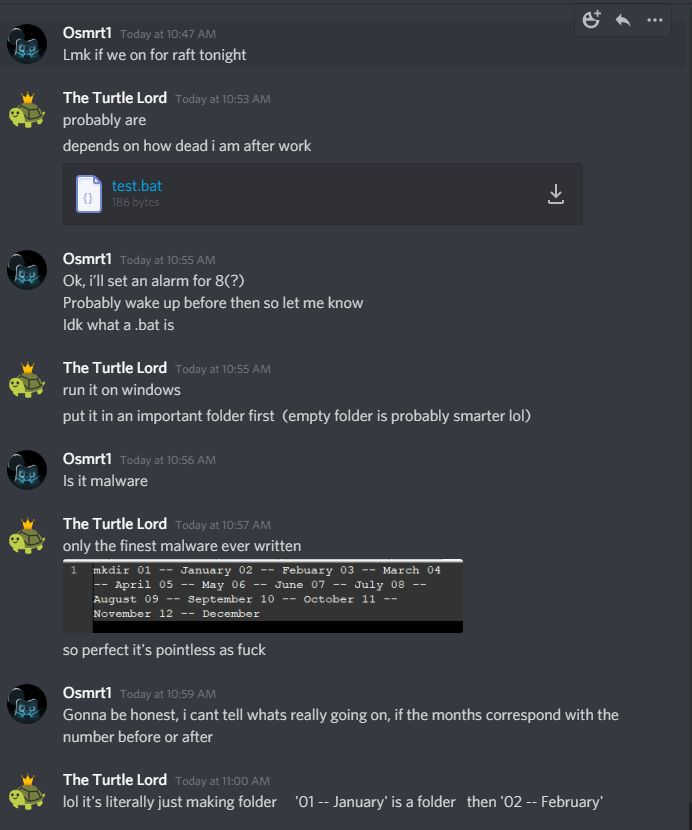

I wrote the most basic script today

it was either write this pointless thing (or find it online) or type each individual folder name manually, like 12 separate times, and it'll slightly speed up archiving previous years' work in a more organized fashion than my boss was using on the shared drive before. there are probably better ways, but ehh, it works for me

also, I already fixed the typos, spelling was never my strong point lol
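
The screenshot of that script didn't survive, so this is only a guess at the idea. It is a minimal Python sketch assuming the 12 folders are one per month, nested under a year folder. The `YYYY/MM-Month` naming scheme and the `make_archive_folders` name are hypothetical.

```python
import calendar
from pathlib import Path


def make_archive_folders(root: Path, year: int) -> list[Path]:
    """Create root/<year>/<MM>-<Month> for all 12 months; existing folders are kept."""
    created = []
    for month in range(1, 13):
        # calendar.month_name[1] is "January" under Python's default C locale
        folder = root / str(year) / f"{month:02d}-{calendar.month_name[month]}"
        folder.mkdir(parents=True, exist_ok=True)  # exist_ok makes it safe to re-run
        created.append(folder)
    return created
```

For example, `make_archive_folders(Path("archive"), 2023)` would create `archive/2023/01-January` through `archive/2023/12-December`. Zero-padding the month number keeps the folders sorted in calendar order in a file browser.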

-

I really hate how stuff can disappear off the Internet, especially if it's behind a paywall. People aren't encouraged to download and archive stuff. I just wrote this script to archive things off of Locals:

https://gitlab.com/djsumdog/...

I really rushed to get it done because my subscriptions are expiring, and I really no longer want to support these people:

https://battlepenguin.com/politics/...

-

I archive a lot of shit. I've been archiving YouToob videos for the past few years. Nearly 20% of my archived collection is no longer available on YouTube:

https://battlepenguin.com/tech/...

I wrote a tool if you want to check your collection too:

https://gitlab.com/djsumdog/youboot

-

Sorry Office365 users... if you are experiencing 503s, it's probably me.

I'm archiving my boss's inbox 50 messages at a time. So far I've archived over 10,000 emails and only hit a 503 (lasting about 5 minutes) 5-6 times. Still have ~30,000 more to go..
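
A batch-and-back-off loop like the one described could look roughly like this. It is a minimal sketch and is not tied to any real Office 365 API. `archive_batch` is a hypothetical callable you'd supply. `ServiceUnavailable` is a hypothetical exception standing in for however your client surfaces an HTTP 503. The defaults (50 messages per batch, a 5-minute wait) come from the numbers above.

```python
import time
from typing import Callable, Sequence


class ServiceUnavailable(Exception):
    """Hypothetical stand-in for an HTTP 503 raised by your mail client."""


def archive_in_batches(
    message_ids: Sequence[str],
    archive_batch: Callable[[Sequence[str]], None],
    batch_size: int = 50,
    retry_wait_s: float = 300.0,
    max_retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Archive messages in fixed-size batches, waiting and retrying on a 503.

    Returns the number of messages archived. Re-raises ServiceUnavailable if a
    single batch still fails after `max_retries` retries.
    """
    archived = 0
    for start in range(0, len(message_ids), batch_size):
        batch = message_ids[start:start + batch_size]
        for attempt in range(max_retries + 1):
            try:
                archive_batch(batch)
                break
            except ServiceUnavailable:
                if attempt == max_retries:
                    raise  # give up on this batch
                sleep(retry_wait_s)  # the observed 503s lasted about 5 minutes
        archived += len(batch)
    return archived
```

The `sleep` parameter exists only so the wait can be swapped out in tests. In real use the default `time.sleep` applies. Retrying the same batch means a 503 in the middle of a run doesn't skip any messages.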

-

What is the point of archiving posts on Reddit? I often find a post describing a problem that also happens to me, but it was reported about a year ago and the post got archived, so I have no way to complain about it because archived posts are read-only. So sad.

-

Docuware, oh Docuware.

It's meant to be an archiving system, but the moment our director saw workflows, the ball went right out of the court in terms of implementation.

We've gotten to a point where we don't want to use Asana for ticket tracking and task assignment, and we don't want to use a tool that acts as a man in the middle to push information to DBs; we want to use workflows with set conditions to automate every single process in the company. Why? It's cheaper.

The syntax is alrightish for arithmetic expressions, but there are so many limitations that we've gotten to the point where we're absolutely circumventing the entire point of the software.

Initialise variables, Condition, condition, condition, draw data from external sheet, process based thereof.

"oh, why doesn't it display images on the populated forms? I don't want it just as an attachment I need to click next to see".

Frustration is paramount, but the light is at the end of the tunnel.

"Oh, did I mention that we need digital signatures?" You need an additional module, Mr boss. "No, I bought the cloud bundle. Make it work."

Powerful tool, I'll give it that, but its downfall is that it isn't comprehensive.

Month 3, here we go.

-

My new task: Improve performance of an archiving algorithm.

"We don't know what the cause of the performance issues is." I can tell you: Because it's overengineered bullshit! This is spaghetti inside spaghetti on top of spaghetti!

And it doesn't help that they don't want to hear about different archiving algorithms, even though I offered multiple alternatives: one produces output 80% smaller than the other, but the other is 80% faster.

At least I'm instructed to throw it all away and rewrite it and not add even more to the garbage pile. I'm very happy about that.