Search - "processor"

-

Boss: I need to demo our product but it looks smaller on my laptop.

Me: That is because you have a 1920x1080 monitor and your laptop is 1280x800

Boss: Is that something you can fix?

Me: No you will need a new laptop, but the company has a sales laptop with that resolution.

Boss: No just get the company credit card and buy me one today!

*Boss's son hears*

Boss's Son: Here, take the sales laptop

Boss: Will that be quick enough?

Boss's Son: It has an 8-core i7 processor, 16GB of RAM and a dedicated GPU

Boss: *looks at me confused*

Me: You're demoing a web browser, that will be more than OK. But we're using Chrome, so 16GB of RAM will be pushing it.

*me and boss's son laugh*

Boss: Can we upgrade it?

-

Whenever I come across some acronyms...

CD-ROM: Consumer Device, Rendered Obsolete in Months

PCMCIA: People Can’t Memorize Computer Industry Acronyms

ISDN: It Still Does Nothing

SCSI: System Can’t See It

MIPS: Meaningless Indication of Processor Speed

DOS: Defunct Operating System

WINDOWS: Will Install Needless Data On Whole System

OS/2: Obsolete Soon, Too

PnP: Plug and Pray

APPLE: Arrogance Produces Profit-Losing Entity

IBM: I Blame Microsoft

MICROSOFT: Most Intelligent Customers Realize Our Software Only Fools Teenagers

COBOL: Completely Obsolete Business Oriented Language

LISP: Lots of Insipid and Stupid Parentheses

MACINTOSH: Most Applications Crash; If Not, The Operating System Hangs

-

We got a server with two Intel Xeon E5520 processors and each processor has 8 cores at 2.27GHz.

Also the server has 36GB of internal memory.

What do we do with it? We play Solitaire 😎

-

Friend: "Why did you buy a Macbook Pro? Look at the specs, the RAM, the storage, the processor.. heck, ain't it overpriced? I wouldn't if I were you"

Me: "No, I didn't buy it. My company gave it to me when I joined them."

Friend: "Oh.. okay... hey, is there any job opening in your company?"

-

Got my new workstation.

Isn't it a beauty?

Rocking a Pentium II 366 MHz processor.

6 GB HDD.

64 MB SDRAM.

1 minute of battery life.

Resolution up to SXGA (1280x1024)

Removable CD-ROM drive.

1 USB port (we like to use dongles, right?)

Also it has state of the art security:

- No webcam

- No Mic

- Removable WiFi

- I forgot the password

And best of all:

It has a nipple to play with!!

-

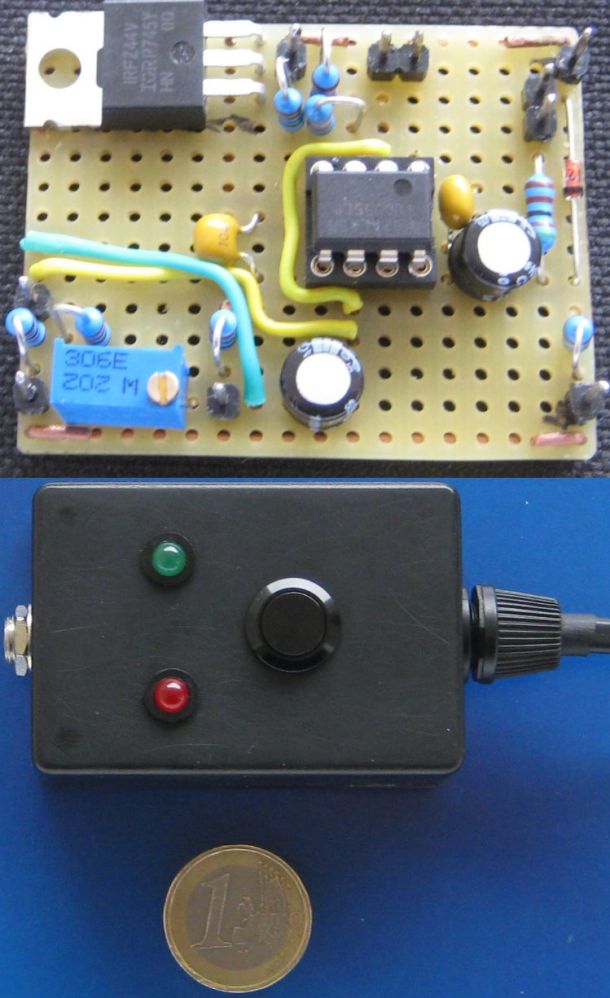

I want to stop charging my e-scooter at around 85% because this will increase the battery life. To avoid always having to pull the plug at the right level, I made a stop circuit that goes between charging brick and e-scooter.

There's no processor involved, just a CMOS 555 used as an inverting Schmitt trigger which controls a power MOSFET. Also two status LEDs and a start switch. The potentiometer adjusts the cut-off level. Worked on first try, with only manual voltage and tolerance calculations beforehand!

-

When I was in 7th grade, my neighbor (a DoD programmer) challenged me to write a sorting algorithm for a hypothetical super limited environment (he said a satellite). It didn’t have any built-in sorting methods, had very limited memory, slow processor, etc. so I needed to be clever about it.

It took me a few nights before I found a solution he liked. The method I came up with counted the number of occurrences of each number in the array and put them in the appropriate spots in a new array. This way it only required O(2n) running time and 2n memory.

I just learned today that this is called the “counting sort” 😄
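For anyone curious, the idea described above could be sketched roughly like this. It is only a minimal illustration, not the original code: the function name `counting_sort` and the `max_value` parameter are made up here, and it assumes the inputs are non-negative integers with a known upper bound.

```python
def counting_sort(values, max_value):
    """Sort non-negative integers in the range 0..max_value (assumed bound)."""
    # One counter per possible value: this is the extra memory the method needs.
    counts = [0] * (max_value + 1)
    for v in values:
        counts[v] += 1

    # Walk the counters in order and emit each number as often as it occurred.
    result = []
    for number, occurrences in enumerate(counts):
        result.extend([number] * occurrences)
    return result


print(counting_sort([3, 1, 2, 3, 0, 1], max_value=3))
```

Note that the counter array grows with the largest value, so this only pays off when the range of numbers is small compared to what the memory allows.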

I’m proud of little 11-year-old me.

-

"Arch Linux is actually not that difficult".

I ssh'ed into my home server yesterday.

I was greeted by a message from an ext3 disk about needing fsck. Fine, "I haven't been in here for a while, might as well do some maintenance". fsck /dev/sda6, let's go!

This nicely "repaired" the sshd service (i.e. cleared the sectors), I cursed at myself for pressing enter at "repair (y)" right before the connection broke.

So I connected a display and keyboard... ok so let's just pacman -Sy sshd or whatever. We can do this! Just check the wiki, shouldn't be that hard!

Wait... pacman has not run since 2010? WAIT ITS ACTUAL UPTIME IS 9 YEARS??? I guess we know why I'm a DB admin and not devops...

Hmm all the mirrors give timeouts? Oh. The i686 processor architecture isn't even supported anymore...?

4 hours, 11 glasses of cognac, 73 Arch32 wiki/forum pages, 2 attempts at compiling glibc, and 4 kernel panics later: "I think I'll buy a new server".

-

THIS is why unit testing is important. I often see newbs scoff at the idea of debugging or testing:

My high school CS project: I made a 2D game in C++. A generic top-down tank game. It was my FIRST project, I knew nothing about debugging or testing, and I just straight up kept at it for 3 months. Used everything C++ and OOP had to offer, thinking "It works now, sure will work later"

Fast forward to evaluation day: I had over 5k lines of code and not a day of testing. ALL the bugs thought to themselves: "YOU KNOW WHAT, LET'S GUT THIS KID"

Now I did see some minor infractions several times but nothing too serious to make me refactor my code. But here goes

I started my game on a different system, with a low-end processor about 1/4 the power of mine (fair assumption). The game crashed on the loading screen. Okay, let's do that again. It finally starts and tanks are going off screen, dead tanks are not being de-spawned, and it ended up crashing the game again. Wow, okay, again! Background image didn't load, can only see a black background. Again! Crashed when I used a special ability. This went on for some time and I gave up.

Prof saw the pain, he'd probably seen dis shit a million times, saw all the hard work and I got a good grade anyways. But god that was embarrassing, the entire class saw that and I cringe at the thought of it.

I never looked at testing the same way again.

-

Finally, I've upgraded my processor. From single core 2.2 to ... wait for it ... dual core 2.7!!!!!!!!!!! Hooray

-

Larry Tesler, a computer scientist who created the terms "cut," "copy," and "paste," has passed away at the age of 74 (17 Feb 2020).

In 1973, Tesler took a job at the Xerox Palo Alto Research Center (PARC) where he worked until 1980. Xerox PARC is famously known for developing the mouse-driven graphical user interface and during his time at the lab Tesler worked with Tim Mott to create a word processor called Gypsy that is best known for coining the terms "cut," "copy," and "paste".

In addition to "cut," "copy," and "paste" terminologies, Tesler was also an advocate for an approach to UI design known as modeless computing. It ensures that user actions remain consistent throughout an operating system's various functions and apps. When they've opened a word processor, for instance, users now just automatically assume that hitting any of the alphanumeric keys on their keyboard will result in that character showing up on-screen at the cursor's insertion point. But there was a time when word processors could be switched between multiple modes where typing on the keyboard would either add characters to a document or alternately allow functional commands to be entered.

-

About two years ago I got roped into something when someone was requesting an $8000 laptop to run a "program" that they wrote in Excel to pull data from our mainframe.

In reality they were using our normal application that interacts with the mainframe and screen scraping it to populate several Excel spreadsheets.

So this guy kept saying that he needed the expensive laptop because he needed the extra RAM and processing power for his application. At the time we only supported 32 bit Windows 7 so even though I told him ten times that the OS wouldn't recognize more than 3.5 GB of RAM he kept saying that increasing the RAM would fix his problem. I also explained that even if we installed the 64 bit OS we didn't have approval for the 64 bit applications.

So we looked at the code and we found that rather than reusing the same workbook he was opening a new instance of a workbook during each iteration of his loop and then not closing or disposing of them. So he was running out of memory due to never disposing of anything.

Even better than all of that, he wanted a faster processor to speed up the processing, but he had about 5 seconds of thread sleeps in each loop so that the place he was screen scraping from would have time to load. So it wouldn't matter how fast the processor was, in the end there were sleeps and waits hard-coded in there to slow down the app. And the guy didn't understand that a faster processor wouldn't have made a difference.
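To put rough numbers on that, here is a back-of-the-envelope sketch. Only the 5 seconds of sleep per loop comes from the story; the iteration count, the 0.2 seconds of actual work per loop, and the function name `total_runtime` are assumptions picked purely for illustration.

```python
def total_runtime(iterations, sleep_s, compute_s, cpu_speedup=1.0):
    """Total wall-clock seconds for a loop that sleeps and then computes.

    Only the compute part shrinks with a faster CPU; the sleep does not.
    """
    return iterations * (sleep_s + compute_s / cpu_speedup)


# Assumed workload: 1000 iterations, 5 s sleep (from the story), 0.2 s of real work.
baseline = total_runtime(1000, sleep_s=5.0, compute_s=0.2)
twice_as_fast = total_runtime(1000, sleep_s=5.0, compute_s=0.2, cpu_speedup=2.0)

saved = (baseline - twice_as_fast) / baseline
print(f"baseline: {baseline:.0f} s, 2x CPU: {twice_as_fast:.0f} s, saved: {saved:.1%}")
```

Under these assumptions, doubling the CPU speed shaves off only a couple of percent, because nearly all of the time is spent sleeping.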

The worst thing is a "dev" that thinks they know what they are doing but they don't have a clue.

-

"Schrödinger's CPU": When your processor randomly and for no apparent reason spins up to 100% and brings the whole system to a halt. Bringing up Task Manager takes forever, and when it finally appears so you could see what process is the culprit, the load quickly drops back to normal again, leaving you with no clue about what happened.

-

College be like

"Today you are going to build an ARM processor, questions?"

"Yeah, how do we do that?"

"It's not my business"

-

Anyone else heard of this cool little device? (GPD Win, an obscure Chinese computer that is like a Nintendo DS but it has an x64 processor and runs full Windows 10)

-

Here are the reasons why I don't like IPv6.

Now I'll be honest, I hate IPv6 with all my heart. So I'm not supporting it until inevitably it becomes the de facto standard of the internet. In home networks on the other hand.. huehue...

The main reason why I hate it is because it looks in every way overengineered. Or rather, poorly engineered. IPv4 has 32 bits worth, which translates to about 4 billion addresses. IPv6 on the other hand has 128 bits worth of addresses.. which translates to.. some obscenely huge number that I don't even want to start translating.

That's the problem. It's too big. Anyone who's worked on the internet for any amount of time knows that the internet on this planet will likely not exceed an amount of machines equal to about 1 or 2 extra bits (8.5B and 17.1B respectively). Now of course 33 or 34 bits in total is unwieldy, it doesn't go well with electronics. From 32 you essentially have to go up to 64 straight away. That's why 64-bit processors are.. well, 64 bits. The memory grew larger than the 4GB that a 32-bit processor could support, so that's what happened.
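For reference, the address counts being thrown around here are just powers of two. A quick sketch to print them (the helper name `address_count` is only for illustration):

```python
def address_count(bits):
    """Number of distinct addresses representable with the given bit width."""
    return 2 ** bits


# Bit widths mentioned in the rant: IPv4, one and two extra bits, 64-bit, IPv6.
for bits in (32, 33, 34, 64, 128):
    print(f"{bits:>3} bits: {address_count(bits):,}")
```

The 33-bit and 34-bit rows come out at roughly 8.6 billion and 17.2 billion, which matches the ballpark figures quoted above.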

The internet could've grown that way too. Heck it probably could've become 64 bits in total of which 34 are assigned to the internet and the remaining bits are for whatever purposes large IP consumers would like to use the remainder for.

Whoever designed IPv6 however.. nope! Let's give everyone a /64 range, and give them quite literally an IP pool far, FAR larger than the entire current internet. What's the fucking point!?

The IPv6 standard is far larger than it should've been. It should've been 64 bits instead of 128, and it should've been separated differently. What were they thinking? A bazillion colonized planets' internetworks that would join the main internet as well? Yeah that's clearly something that the internet will develop into. The internet which is effectively just a big network that everyone leases and controls a little bit of. Just like a home network but scaled up. Imagine or even just look at the engineering challenges that interplanetary communications present. That is not going to be feasible for connecting multiple planets' internets. You can engineer however you want but you can't engineer around the hard limit of light speed. Besides, are our satellites internet-connected? Well yes but try using one. And those whizz only a couple of km above sea level. The latency involved makes it barely usable. Imagine communicating to the ISS, the moon or Mars. That is not going to happen at an internet scale. Not even close. And those are only the closest celestial objects out there.

So why was IPv6 engineered with hundreds of years of development and likely at least a stage 4 civilization in mind? No idea. Future-proofing or poor engineering? I honestly don't know. But as a stage 0 or maybe stage 1 person, I don't think that I or civilization for that matter is ready for a 128-bit internet. And we aren't even close to needing so many bits.

Going back to 64-bit processors and memory. We've passed 32 bit address width about a decade ago. But even now, we're only at about twice that size on average. We're not even close to saturating 64-bit address width, and that will likely take at least a few hundred years as well. I'd say that's more than sufficient. The internet should've really become a 64-bit internet too.

-

*When your processor is left with no power to create a process to kill the process that's consuming all your processor's processing power.

-

Okay, let's write this before I go mad...

I'm one of those guys who says "use the OS which suits you the most, or you're most familiar with", and I've always been a Windows guy. Didn't really have any reason to use Linux, because for school stuff or programming (Java and Android and C) Windows was great enough...

BUT MOTHERF@CKERS at Microsoft, I've had enough...

First my handheld computer goes nuts, because Windows is eating 80% of the processor, and if I fix it, then some other kind of Windows-related thing eats up that much. And you know what? I've been okay with that, because that's only a handheld computer, but boy, didn't my main computer start to do the same?!?

I cannot do anything. Basically I start something trivial up (by trivial I mean trivial, like idk, a text editor, not even a browser or an IDE or anything that would take a bit more RAM) and my computer can't do shit....

I'm so mad.... Currently installing elementary OS... F@ck this shit, I'm out...

(And let's not forget the hours of 'updates' which don't do shit....)

-

I have a laptop which I bought for the sole purpose of gaming, and I bought a hell of a lot of games off Steam to start with.

But the problem here is I have to run those games on Windows (NVIDIA graphics card) and I only have a primary HDD and no SSD. Even though the RAM and processor are up to speed, I am not able to get good performance out of it because of the high disk I/O. To top it off, random Windows processes are hogging the HDD in the background.

Any suggestions on what to do?

-

Been reviewing A LOT of clients’ and suppliers’ code lately. I just want to sit in the corner and cry.

Somewhere along the line the education system has failed a generation of software engineers.

I am an embedded C programmer, so I’m pretty low level, but I have worked up and down and across the abstractions in the industry. I think the high level guys don’t make these same mistakes due to the stuff they learn in CS courses regarding OOD, i.e. how to properly architect software in a modular way.

I think it may be that too often the embedded software is written by EEs and not CEs, and due to their curriculum they lack good software architecture design.

Too often I will see huge functions with large blocks of copy-pasted code with the only difference being a variable name. All stuff that can be turned into tables and iterated through so the function can be less than 20 lines long in the end, which is a 100x reduction when the function started out as 2000 lines because they decided to hard code everything and not let the code and processor do what they’re good at.
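A rough sketch of the table idea, for illustration only. It is written in Python for brevity rather than embedded C (where the same thing would typically be a const array of structs), and the channel names, scale factors and the `convert_all` helper are all hypothetical, not taken from any code mentioned here.

```python
from typing import NamedTuple


class Channel(NamedTuple):
    """One row of the table: everything that differed between the pasted blocks."""
    name: str
    scale: float
    offset: float


# Hypothetical lookup table replacing one copy-pasted block per channel.
CHANNELS = (
    Channel("temperature", 0.5, -40.0),
    Channel("pressure", 2.0, 0.0),
    Channel("voltage", 0.25, 0.0),
)


def convert_all(raw_readings):
    """Apply each channel's scale and offset to its raw reading."""
    return {
        ch.name: raw * ch.scale + ch.offset
        for ch, raw in zip(CHANNELS, raw_readings)
    }


print(convert_all([100, 50, 48]))
```

Adding a channel then means adding one row to the table instead of pasting another block and renaming variables.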

Arguments of performance are moot at this point, I’m well aware of constraints and this is not one of them that is affected.

The problem I have is trying to take their code in and understand what it’s trying to do, and to do that you must scan up and down HUGE sections of the code, even 10k+ lines in one file, because their design was to not even use multiple files!

Does their code function? Yes .. does it work? Yes.. the problem is readability, maintainability. Completely non-existent.

I see it so often I almost begin to second-guess myself and think .. am I the crazy one here? No. And it’s not their fault, it’s the education system. They weren’t taught it so they think this is just what programmers do.. hugely mundane copy-paste of words, change a few little things here and there and done. NO, actual software engineers architect systems and write code so that they do it in the laziest way possible. Not how these folks do it.. it’s like all they know are if statements and switch statements and everything else is unneeded.. fuck structures and shit, just hard code it all... explicitly write everything, let’s not be smart about anything.

I know I’ve said it before but with covid and winning so much more business due to competition going under, I never got around to doing my YouTube channel and web series on how I believe software should be taught across the board.. it’s more than just syntax, it’s a way of thinking.. a specific way of architecting any software, embedded or high level.

Anyway rant off, had to get that off my chest. I literally want to sit in the corner and cry this weekend at the horrible code I’m reviewing, and it just keeps happening. Over and over and over. The more people I bring on or projects I acquire, it’s like fuck me wtf is this shit!!! Take some pride in the code you write!

-

I have a whatsapp group with my friends, none of which are techies. A while ago one of them was looking for a phone to buy, so he started looking at models, specs and all that, but got pretty confused and asked a pretty well-informed question to the group:

"Guys, what is that quad core thing?

And what is a RAM? Is it something like the processor of the phone or what? "

OK, pretty typical stuff up until this point. The guy knows nothing about this sort of thing, I wouldn't criticize him or insult him or anything like that. No, that's not the problem. The problem is the person that responded to him. This... This melted my brain so much I will never forget it:

"Don't worry about that, you only have to look at how many gigahertz does the processor run at. Don't worry about the number of cores or ram. The GHz are the result of the amount of ram and cores, so the more the gigahertz, the better the phone."

PS: "Also take a look at how many megapixels does the camera have if you want to take photos".

Some people just talk out of their ass and pretend like they're experts on any topic they've read about for 5 minutes on the Internet

-

Sooo Windows has updated itself (in a short 6 hours) and I was wondering what eats my pocket PC's 4 GB of RAM, so I checked, and it turns out the system has been eating 40-50% of it and of its processor ever since the update... *Sigh* Awesome....

(Picture src: tumblr/just-shower-thoughts --> [in reality] r/showerthoughts)

-

I'm a freelance web developer and I normally work on small to medium sized websites, 9 out of 10 times based on WordPress and 10 out of 10 times with a limited budget.

8 out of 10 times the site's content will be updated by someone with at best casual knowledge of website management.

Say what you will about WP but it's my bread and butter and it works great for just these kinds of websites; where the cost is a dealbreaker and the end product should be as user friendly as a standard word processor.

No, you probably wouldn't build a control panel for the next space shuttle or an online bank in WordPress, but I rarely need to concern myself with those kinds of projects so that really doesn't affect me.

Pretty much the same reason I have a Kia car even though I wouldn't win a Formula 1 race with it.

I for one am grateful that there's an open source tool available to my clients that more than adequately meets their needs (that's also fun to work with and build custom solutions on for me as a developer).

-

I was on vacation when my employer’s new fiscal year started. My manager let me take vacation because it’s not like anything critical was going to happen. Well, joke was on us because we didn’t foresee the stupidity of others…

I had to update a few product codes in the website’s web config and deploy those changes. I was only going to be logged in for 30 minutes to complete that.

I get messaged by one of our database admins. He was doing testing and was unable to complete a payment on the website. That was strange. There was a change pushed by our offsite dev agency, but that was all frontend changes (just updating text) and wouldn’t affect payments.

We don’t want to enlist the dev agency for debugging work, especially when it’s not likely that it’s a code issue. But I was on vacation and I couldn’t stay online past the time I had budgeted for. So my employer enlists the dev agency for help. It’s going to be costly because the agency is in Lithuania, it was past their business hours, and it was emergency support.

Dev agency looks at error logs. There are Apple Pay errors, but that doesn’t explain why non Apple Pay transactions aren’t going through. They roll back my deployment and theirs, but no change. They tell my employer to contact our payment processor.

My manager and the Product Manager contact Payroll, who is the stakeholder for our payment gateways. Payroll contacts our payment gateway and finds out a service called Decision Manager was recently configured for our account. Decision Manager was declining all payments. Payroll was not the person who had Decision Manager installed and our account using this service was news to her.

Payroll works with our payment processor to get payments working again. The damage is pretty severe. Online payments were down for at least 12 hours. Our call center had logged reports from customers the night before.

At our post mortem, we had to find out who ok’d Decision Manager without telling anyone. Luckily, it was quick work. The first stakeholder up was for the Fundraising Dept. She said it wasn’t her or anyone on her team. Our VP of Analytics broke it to her that our payment processor gave us the name of the person who ok’d Decision Manager and it was someone on the Fundraising team. Fundraising then starts backtracking and says that oh yes she knew about it but transactions were still working after the Decision Manager had been configured. WTAF.

Everyone is dumbfounded by this. How could you make a big change to our payment processor and not tell anyone? How did our payment processor allow you to make this change when you’re not the account admin (you’re just a user)?

Our company head had to give an awkward speech about communication and how it’s important. The web team can’t figure out issues if you don’t tell us what you did. The company head was pissed because it was a shitty way to start off the new fiscal year. Our bill for the dev agency must have been over $1000 for debugging work that wasn’t helpful.

Amazingly, no one was fired.

-

So I'm looking to buy a drone for my internship company to find people during floods. And damn these companies suck balls.

Closed source.

You want to use API for onboard image processing?

Buy a €3500 drone

Add €1100 processor stuff

Add €850 camera

ugh.

-

When the new iPhone has "The highest pixel density in an iPhone yet" and "Most ambitious chip ever in an iPhone", like they'd make a new iPhone with a slower processor and a worse display?

-

Help.

I'm a hardware guy. If I do software, it's bare-metal (almost always). I need to fully understand my build system and tweak it exactly to my needs. I'm the sorta guy that needs memory alignment and bitwise operations on a daily basis. I'm always cautious about processor cycles, memory allocation, and power consumption. I think twice if I really need to use a float there and I consider exactly what cost the abstraction layers I build come at.

I had done some web design and development, but that was back in the day when you knew all the workarounds for IE 5-7 by heart and when people were disappointed there wasn't going to be a XHTML 2.0. I didn't build anything large until recently.

Since that time, a lot has happened. Web development has evolved in a way I didn't really fancy, to say the least. Client-side rendering for everything the server could easily do? Of course. Wasting precious energy on mobile devices because it works well enough? Naturally. Solving the simplest problems with a gigantic mess of dependencies you don't even bother to inspect? Well, how else are you going to handle all your sensitive data?

I was going to compare this to the Arduino culture of using modules you don't understand in code you don't understand. But then again, you don't see consumer products or customer-specific electronics powered by an Arduino (at least not that I'm aware of).

I'm just not fit for that shooting-drills-at-walls methodology for getting holes. I'm not against either easy or pretty-to-look-at solutions, but it just comes across as wasteful to me nowadays.

So, after my hiatus from web development, I've now been in a sort of internet platform project for a few months. I'm now directly confronted with all that you guys love and hate, frontend frameworks and Node for the backend and whatever. I deliberately didn't voice my opinion when the stack was chosen, because I didn't want to interfere with the modern ways and instead get some experience out of it (and I am).

And now, I'm slowly starting to feel like it was OKAY to work like this.

-

First dev job: port Unix to the Transputer, a (now defunct) bizarre processor with no stack, no registers and no compiler. That was fun! And that was in 1991 😎

-

Being a user, you watch your processor handle things...! 😪

Become a superuser 🤓

Processor watches you handle everything 😎

-

Yesterday I completed a transactions module that used an external payment processor, similar to PayPal. It was hard, but after a few hours of trying out different options I finally managed to get it to work.

I decided to create a simple prototype UI without any styling just to show my progress to the manager and let him know that it's working.

His response? "yeah, that seems to work, but that UI is terrible and not appealing at all. Change that immediately and try to add more thought into your design"

I guess I won't be making prototypes any time soon

-

The ability to complain "Gradle is too slow on my laptop!" to my dad to get a better one.

Saying thanks to Google.

-

We had a sprint where we removed some fields from the signup page, in order not to "scare users off" with the amount of information requested. Quite a few changes in frontend and backend alike.

Only now, on the final day of the sprint (where we're supposed to deploy the changes), do we realize some of that information is actually required by the payment processor, and likely for very predictable *legal* reasons which I even questioned during planning.

-

Had an interview with a potential customer last week, and he started questioning my technical capability in the middle of the discussion on the basis that I’m taking notes with pen and paper...

Yes, I can type. At 90+ WPM, I can darn near produce a transcript of everything we say. But I won’t remember any of it afterward, because it passes straight from the ears to the hands without any processing.

“You see, that’s what we have something called ’search’ for...”

...Yeah. Except that doesn’t help with picking out the most important points from a wall of text, organizing it in a way that allows visualizing relationships between concepts, and other non-linear things that are hard to do on the fly in a word processor.

“Well, how about we get you a tablet with a pen and you can just write on that, then?”

How about no.

Ended up turning him down because of other concerns that were raised that were, suffice to say, about as onerous as you might expect from that exchange.

-

One thing I learned over the years is that even when you think you can't do something or don't have the strength to do it, you actually can.

People do nothing better than to make excuses for themselves or blame others for the things they did without even considering that they could have done something about it.

The brain is a powerful processor to the point that when you think you're sick constantly your body will react accordingly.

Thing is though. If you don't take the opportunities that present themselves or don't look for them you'll probably get nowhere to the point where it could lead to depression.

Sure enough, failures and mistakes happen all the time. Hardly anything will go right the first time, which can leave you demotivated and sometimes even depressed.

Why? Because you forgot to think "what can I improve the next time"

A co-worker of mine keeps going back to his project he's working on because the boss has something in mind but somehow fails to translate it to him. He never stops to think what the desired functionality is compared to what it should do or look like (UI/UX). Eventually he snaps blaming the boss that he had to change it a couple of times.

This has happened multiple times since I started my Internship to the point where it just starts to irritate me.

Of course it's not always your fault but there are plenty of cases where it is or where you could have prevented it.

Mistakes and failures make you stronger only if you want to learn from them.

Have a good day

-

I wanted an Android phone with the latest Oreo version, at least 4GB RAM, 32GB or more storage, a good processor, 4G dual SIM support and a resolution of 1440 by 2560 pixels, at US$100.

Well, I got all of it for US$125.

I went to the LineageOS site, exported and filtered all supported devices by my relevant device attributes.

Got a LeEco LeMax 2 for US$125 on eBay. Installed the latest LineageOS 15.1 with Android 8.1.0. Got 25GB free space.

Been using the phone for the last 10 days, flawlessly.

-

I enjoy watching the Microsoft events, as they always introduce something completely new, that no one's really made before. Unlike certain companies *cough* Apple *cough* who just slap a better processor on their existing devices and call it "revolutionary". I like all this innovation

-

Amdy's story.

Amdy didn't have it easy. He's just a little APU and was already outdated when he was manufactured. But it got even worse! He didn't do anything wrong, but upon assembly, they lasered a different part number on him.

He didn't think much about it, but then they denied him all the goodies his brothers got: a nice printed box, a cooler, a leaflet, and a sticker.

Amdy didn't get any of that and wasn't welcome in the boxed camp. Instead, they stuffed him into a shoddy tray cardboard box with just some ESD foam for the pins.

Amdy was disappointed. That was just not fair! He was capable like his brothers. To add insult to injury, not even the manufacturer wanted to give warranty on the poor ugly duckling. They didn't listen to his complaints and shipped him to an unknown fate.

Then our roads crossed because Amdy was 10 EUR cheaper than the boxed ones at that point. Little Amdy breathed heavily when he finally got out of the mini box and seemed a bit disoriented. Poor little sod, what did they do to you?

Then he spotted the cooler. He had never seen anything like this before, so much better than the coolers his boxed brothers had received! And even top of the line thermal paste!

Amdy decided to be as good and fast a processor as a small Zen+ APU could possibly be. What was that software stuff? Didn't look like Windows. Ooohhh - Amdy rejoiced when he figured out that he was supposed to run Linux!

And that's how a despaired and unhappy APU finally found a life full of goodness.

-

One of the more memorable computer problems I solved was when I added some Lego blocks to fix a recurring Windows bluescreen.

A friend had a Pentium 3 (Slot 1) that kept throwing several bluescreens per day, so I decided to help.

I opened up the computer and saw that the processor was not properly secured in its place and the plastic pieces that should have been holding it were gone. So I improvised, pressing in some Lego pieces that I found somewhere so that the processor didn't move if someone walked close to the computer. After that he didn't have any more bluescreens than the rest of us.4 -

I just discovered that my dedicated server is a tamagotchi laying around at somebody's home. While benchmarking cpu I discovered that it performed worse than my 4yr old chromebook with mediatek processor.4

-

Our university labs still use computers with 512 MB of RAM and a Celeron processor for programming and networking courses. Even worse, some of the mice/keyboards/monitors aren't working, and we occasionally have to take exams on those machines ...5

-

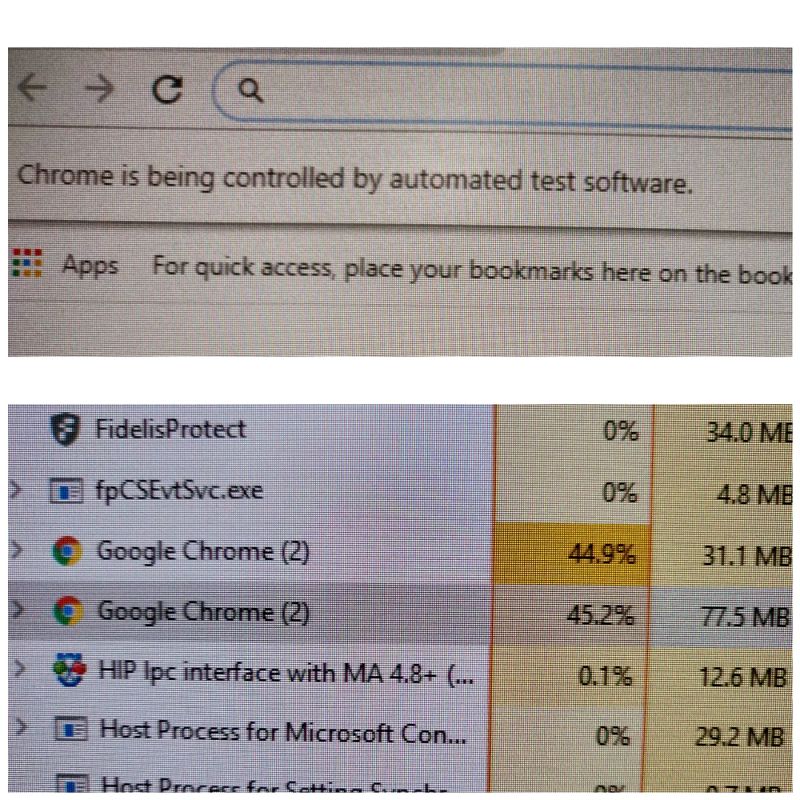

Chrome is getting its ass whooped and then crying like a whiny bitch.

Using 90.1% of CPU.

PS: I have an i7 8th gen processor.

20 -

Ahahaha

More of a surprise.

Accidentally double-clicked a BASH (.sh) script on a WINDOWS machine.

Welp, some random bash processor appeared and the script was executed correctly.

I almost shit my pants, it's a script which changes production env.

I was expecting a notepad lol9 -

MacRant: I was waiting for a new MacBook Pro release for a while to upgrade my old laptop (not a Mac). Watched the release, had very mixed feelings about it, but still ordered (clenching my teeth and saying sorry to my wallet). Next day I looked into alternatives, cancelled the order to have more time to think, and am now deciding... I mean c'mon, no latest 7th gen processor, no 32 GB memory option, 2 GB video is OK for non-gaming, and the whole "big" thing is the Touch Bar that I DON'T F* NEED. They should drop the "Pro" and name it "Fancy Strip".

So I looked into alternatives, and the Dell XPS 15 with maxed specs is twice as juicy, and has not a touch bar but a whole freakin' 4K touch screen, for a lower price :/

Just wanted to rant about the new MacBook's specs and price and see what you all think of MacBook vs alternatives?16 -

So, I decided to post this based on @Morningstar's conundrum.

I'm dissatisfied with the laptop market.

Why THE FUCK should I have to buy a gaming laptop with a GTX 1070 or 1080 to get a decent amount of RAM and a fucking great processor?

I don't game. I program. I don't even own a fucking Steam library, for clarification. Never have I ever bought a game on Steam. Disproving the notion that I might have a games library out of the way, I run Linux. Antergos (Arch-based) is my daily driver.

So, in 2017 I went on a laptop hunt. I wanted something with decent specs. Ultimately ended up going with the system76 Galago Pro (which I love the form factor of, it's nice as hell and people recognize the brand for some fucking reason). Matter of fact, one of my profs wanted to know how I accessed our LMS (Blackboard) and I showed him Chromium....his mind was blown: "It's not just text!"

That aside, why the fuck are Dell and system76 the only ones with decent portables geared towards developers? I hate the prospect of having to buy some clunky-ass Republic of Gamers piece of shit just to have some sort of decent development machine...

This is a notice to OEMs: yall need to quit making shit hardware and gaming hardware with no mid-range compromise. Shit hardware is defined as the "It runs Excel and that's all the consumer needs" and gaming hardware is "Let's put fucking everything in there - including a decent processor, RAM, and a GTX/Radeon card."

Mid-range that is true - good hardware that handles video editing and other CPU/RAM-intensive tasks and compiling and whatnot but NOT graphics-intensive shit like gaming - is hard to come by. Dell offers my definition of "mid-range" through Sputnik's Ubuntu-powered XPS models and what have you, and system76 has a couple of models that I more or less wish I had money for but don't.

TBH I don't give two fucks about the desktop market. That's a non-issue because I can apply the logic that if you want something done right, do it yourself: I can build a desktop. But not a laptop - at least not in a feasible way.23 -

Today was a day at work that I felt like I made a significant contribution. It was not a lot of code. Actually it was a difference of 3 characters.

I am developing an industrial server so that my employer can provide access to their machines to enterprise industrial systems. You know, the big boys toys. Probably in fucking java...

Anyway, I am putting this server on an embedded system. So naturally you want to see how much serving a server can serve. In this case the device is more processor starved than memory starved. So I bumped up the speed of the serving from 1000mS to 100mS per sample. This caused the processor to jump from 8% of one core (as read from top) to 70%. Okay, 10x more sampling, then approx 10x CPU usage. That is good. I know some basic metrics for a certain amount of data for a couple of different sampling rates.

Now, I realized this really was not that much activity for this processor. I mean, it didn't seem to me that it "took much" to see a large increase of processor usage. So I started wondering about another process on the system that was eating 60 to 70 % all the time. I know it updated a screen that showed some not often needed data from its display among controlling things. Most of the time it will be in a cabinet hidden from the world. I started looking at this code and figured out where the display code was being called.

This is where it gets interesting. I didn't write this code. Another really good programmer I work with wrote this. It also seemed to be a pretty standard approach. It had a timer that fired an event every 50mS. This is 20 times per second. So 20 fps if you will. I thought, what would happen if I changed this to 250mS? So I did. It dropped the processor usage to 15%! WTF?! I showed another programmer: WTF?! I showed the guy who wrote it: WTF?! I asked what does it do? He said all it does is update the display. He said: Let's take it to 1000mS! I was hesitant, but okay. It dropped to 5%!
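Those numbers hang together surprisingly well if you assume each display update costs a fixed chunk of CPU time. A rough back-of-the-envelope sketch (the function names are made up for illustration, and 65% is just the midpoint of the "60 to 70 %" mentioned above):

```python
def updates_per_second(interval_ms: float) -> float:
    """How often a timer with the given period fires each second."""
    return 1000.0 / interval_ms


def cpu_ms_per_update(cpu_percent: float, interval_ms: float) -> float:
    """CPU time (ms) one update costs, given the observed load on one core."""
    busy_ms_per_second = cpu_percent / 100.0 * 1000.0
    return busy_ms_per_second / updates_per_second(interval_ms)


def predicted_cpu_percent(cost_ms: float, interval_ms: float) -> float:
    """Expected load on one core if every update costs cost_ms of CPU."""
    return cost_ms * updates_per_second(interval_ms) / 1000.0 * 100.0


# Assumption: ~65% of one core at a 50 ms timer period.
cost = cpu_ms_per_update(65.0, 50.0)
for interval in (50, 250, 1000):
    print(interval, "ms ->", round(predicted_cpu_percent(cost, interval), 2), "%")
```

Under that assumption one update costs about 32.5 ms of CPU, which predicts roughly 13% at 250mS and a bit over 3% at 1000mS. That is close to the 15% and 5% actually measured, so the display update itself looks like the expensive part.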

What is funny is several people all said: This is running kinda hot. It really shouldn't be this hot.

Don't assume. If you have a hunch, play with it if it's safe to do so. You might just shave off 55 to 60 % CPU usage on your system.

So the code I ended up changing: "50" to "1000".16 -

I am building a PC for my first time and thought about every step more than twice. This is going to be my build:

Processor: AMD Ryzen™ 7 2700

Mainboard: X370

RAM: Corsair DIMM 8 GB DDR4-2400

Video card: Zotac NVIDIA GeForce GTX 1050 Ti

SSD: Samsung 960 EVO 250 GB

HDD: Seagate ST1000DM010 1 TB

PSU: PURE POWER 10 | 300W

Case: Aerocool Cylon RGB Midi-Tower - black

What are your opinions on this build?59 -

Friend : hey! I wanna buy a laptop.. range is about entry level nothing hi fi! But it should work for 3-4 years.

Me : sure.. give me a few hours..i'll get back.

*Looks all around for the best thing in that price range.

*Sends a list of laptops ranked based on value for money.

Friend : bought it! Yay! 😎😎😎

*Buys the shittiest laptop they could find at that price range with an absolute old age processor.

Why the fuck did you even ask me in the first place? Wasted a couple of hours for me.6 -

Found this little gem in the AMD64 reference manual:

"When PCIDs are enabled the system software can store 12-bit PCIDs in CR3 for different address spaces. Subsequently, when system software switches address spaces (**by writing the page table base pointer in CR3[62:12]**), the processor **may use TLB mappings previously stored for that address space and PCID**".

later:

"Updates to the CR3 register cause the entire TLB to be invalidated except for global pages."

So let me get this straight: PCIDs allow you to reuse TLB entries (instead of flushing the entire TLB) when writing a new address space to CR3 but writing to CR3 always flushes the entire TLB anyways

Just why 🤦♂️7 -
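For what it's worth, my understanding of the manuals (worth double-checking against your copy) is that the apparent contradiction is resolved by bit 63 of the value written to CR3: with PCIDs enabled, setting it asks the processor not to invalidate the TLB entries for that PCID. A small sketch of how such a value would be composed; `make_cr3` and its parameter names are made up for illustration:

```python
PCID_MASK = 0xFFF        # PCID lives in CR3[11:0] when PCIDs are enabled
NO_FLUSH_BIT = 1 << 63   # assumption: bit 63 of the written value means "keep TLB entries"


def make_cr3(page_table_base: int, pcid: int, no_flush: bool) -> int:
    """Compose the 64-bit value system software would write to CR3."""
    if page_table_base & PCID_MASK:
        raise ValueError("page table base must be 4 KiB aligned")
    if not 0 <= pcid <= PCID_MASK:
        raise ValueError("PCID must fit in 12 bits")
    value = page_table_base | pcid
    if no_flush:
        value |= NO_FLUSH_BIT
    return value


print(hex(make_cr3(0x1000, 5, no_flush=True)))
print(hex(make_cr3(0x1000, 5, no_flush=False)))
```

So the "updates to CR3 invalidate the entire TLB" sentence would describe the plain case, and the PCID paragraph the case where software opts out of the flush.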

Short story for anyone interested in the image: when we change an idea, we change the whole idea. And it is likely to happen very often. Sometimes twice a day, every day, for a week.

Long story:

I am hopeless:

I am an IT university student. I know how to program and how to search for a fucking manual, but I am dealing with electronics and PCBs...

I have to write the firmware for a board (Atmel things) and it has to talk via SPI with some other devices (it is a slave of one and the master for all the others; I will use two SPI channels). This should be easy...

I have no senior to ask. All I have is Google, and I find problems in everything I try to do, every - fucking - single - one!!!! I know that the solution is always of the "you have to plug it in" type, but

NOT EVEN GOOGLE IS BEING OF HELP!

Let me explain this morning pain:

I can't add libraries in Atmel Studio. Something is wrong with the ASF wizard, and I have only found a tutorial that says which buttons to press to solve my problem... I DO NOT HAVE THESE BUTTONS!!!

And the library I wanted to add is the one that makes the board talk with the computer on its COM port... (And gives me some debug messages...)

And the wizard gives problems because I created the project using an online Atmel tool...

YES, I tried to create a project with ASF and then add the files given by the online tool.... THEY DO NOT COMPILE. I WOULD HAVE TO MESS WITH A 400-LINE MAKEFILE that is anything but human readable...

I haven't even looked for anything SPI related this morning.

I am even forced to use Windows, because every question in the forums and every noobish tutorial is based on it...

And then i find the tutorial with the perfect title, holy shit this is the thing i truly need!!!!! It says how to open a file. And then stops. WHAT ABOUT THE THING YOU WERE TALKING ABOUT IN THE TITLE??????

This project is the upgrade of a glue pump based on an ATmega328 (the Arduino Uno processor) that is currently being produced and sold by our "company" .... .... which is composed of me and the boss.

He is a very nice and smart person. He tries to give me ideas for the solution, and if I cannot find out how to do something we can even change a lot of the specifics of the project (the image shows our idea change). Every board has some weeks of mornings like the one described above (I work only in the morning).

I am learning a whole lot of things...

But the fact that everything I try fails is destroying me. What would you do in my place?

PS. Lots of love for the ones who made it to the end <3

6 -

So my wife bought a really old android tablet (it's on gingerbread lol) so I've decided to bring it up to android pie, yes that means building a custom ROM, from scratch, for a 7 year old device. I will be documenting my progress and if I fail then at least it will be published research as the memory optimization in android pie is so much smoother now it should be good. If it fails I shall try to build android go to the device.

It's still got a 1.5ghz processor and 1gb of ram which should be fine so here's hoping.23 -

From Sarah Connor Chronicles, 2008: "They used to think that 12 nanometer scale was impossible. The circuits are so tiny, you're all but in the quantum realm. It's the most sophisticated processor on earth. If you could take your memories, your consciousness, everything that makes you a person, turn it into pure data, and download it onto a machine, that chip could run it."

I'm watching the DVD on a quadcore Ryzen APU that is built in 12nm, and it was already outdated when I bought it last year. I guess I better download myself to my laptop because that's a 7nm Ryzen.14 -

Running code in a JVM ... which is a virtual machine...

Inside a VM that runs Linux...

Inside a host OS that runs on native...which runs on a CISC processor... that internally runs a RISC architecture... so that makes the CISC a VM...

The RISC architecture I am pretty sure runs on Elf Magic... I am fairly certain Turing was an Elf working for Santa...

So I am really running my code on VM Elf Magic9 -

Dear brain, could u please work?

"No you motherfugging arsehole, scratch the sand out of your vagina and make yourself your own processor. Fuck u."

Seems like it's the jolly season of "my brain is uncooperative and unwilling".1 -

What a new years start..

"Kernel memory leaking Intel processor design flaw forces Linux, Windows redesign"

"Crucially, these updates to both Linux and Windows will incur a performance hit on Intel products. The effects are still being benchmarked, however we're looking at a ballpark figure of five to 30 per cent slow down"

"It is understood the bug is present in modern Intel processors produced in the past decade. It allows normal user programs – from database applications to JavaScript in web browsers – to discern to some extent the layout or contents of protected kernel memory areas."

"The fix is to separate the kernel's memory completely from user processes using what's called Kernel Page Table Isolation, or KPTI. At one point, Forcefully Unmap Complete Kernel With Interrupt Trampolines, aka FUCKWIT, was mulled by the Linux kernel team, giving you an idea of how annoying this has been for the developers."

>How can this security hole be abused?

"At worst, the hole could be abused by programs and logged-in users to read the contents of the kernel's memory."

https://theregister.co.uk/2018/01/...

22 -

I asked one of my engineering classmate which processor they had in their laptop.

Ans : 3GB.

I don't know whether they don't know shit about computers or they are just bad at English.10 -

First year: intro to programming, basic data structures and algos, parallel programming, databases and a project to finish it. Homework should be kept track of via some version control. Should also be some calculus and linear algebra.

Second year:

Introduce more complex subjects such as programming paradigms, compilers and language theory, low level programming + logic design + basic processor design, logic for system verification, statistics and graph theory. Should also be a project with a company.

Year three:

Advanced algos, datastructures and algorithm analysis. Intro to Computer and data security. Optional courses in graphics programming, machine learning, compilers and automata, embedded systems etc. ends with a big project that goes in depth into a CS subject, not a regular software project in java basically.4 -

Must nearly every recently-made piece of software be terrible?

Firefox runs terribly slowly on a four-core 1.6GHz processor when given eight (8) gigabytes of RAM. Discord's user interface is awfully slow and uses unnecessary animations. Google's stuff is just falling apart; a toaster notification regarding MRO stock was recently pushed such that some markup elements of this notification were visible in the notification, the download links which are generated by Google Drive have sometimes returned error 404, and Google's software is overall sluggish and somewhat unstable. Today, an Android phone failed to update the Google Drive application... and failed to return a meaningful error message. Comprehensive manuals appear to be increasingly often not provided. Microsoft began to digest Windows after Windows XP was released.

Laziness is not virtuous.

For all computer programs, a computer program should be written such that this computer program performs well on reasonably terrible hardware... and kept simple. The UNIX philosophy is woefully underappreciated.37 -

They all want to make games like this, and minimum requirements are:

Processor: Intel Core i5 3470 @ 3.2GHZ (4 CPUs) / AMD X8 FX-8350 @ 4GHZ (8 CPUs)

Memory: 8GB.

Video Card: NVIDIA GTX 660 2GB / AMD HD7870 2GB.

Sound Card: 100% DirectX 10 compatible.

HDD Space: 65GB.

3 -

Well, here is another Intel CPU flaw.

I'm starting to think that all these were done on purpose...

https://thehackernews.com/2019/05/...3 -

!rant

My laptop just died for the third time.

Need to buy a new one. Not a mac.

Linux machine with a 15" screen, SSD, USB-C, 8 GB of RAM and a graphics processor.

Suggestions?29 -

Rant:

I am at work, and someone says to me that the system we are working on is multithreaded. I tell them no, it's not multithreaded, and in this context things cannot happen concurrently. It's a single-core ARM7TDMI. Arguments ensue about the difference between multithreading, multitasking and multiprocessing. I proceed to explain that this is a multitasking, interrupt-driven system with no context switching or memory segmentation, so one heap for all tasks, because that's how we have it configured, and there is only one core. So there is no way the error he just described could possibly happen. Then he tells me I'm wrong but refuses to even look at the processor manual and rejects the Wikipedia entry for multithreading. So I plan on calling off so I can just have the next two weeks off while he tries to figure out why two things are happening at once on this system. He deserves all the frustration that is to follow.1 -

For the first time ever, I locked up a processor while working. Take that, 24 cores!

Unrelatedly, if someone is in the office, could you please power cycle my box? ...Thanks.2 -

Because I own http://grnail.co.uk and http://hotrnail.co.uk (which I bought to prevent scammers having access to them), I often get emails about peoples' accounts. I could do a password reset and own these accounts, but of course, I don't.

However, today I started getting passport scans and personal details from Syria...

2 -
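The trick with those two domains is that "rn" reads as "m" in most fonts. A minimal sketch of how a lookalike check could work; the `LOOKALIKES` table and both function names are made up for illustration, and real homoglyph detection needs a much bigger table:

```python
# Assumption: a tiny hand-picked table of character sequences that are easy to misread.
LOOKALIKES = {"rn": "m", "vv": "w"}


def normalize(domain: str) -> str:
    """Collapse easily confused character sequences into what the eye reads."""
    result = domain.lower()
    for fake, real in LOOKALIKES.items():
        result = result.replace(fake, real)
    return result


def looks_like(candidate: str, legitimate: str) -> bool:
    """True if candidate is a different domain that reads the same as legitimate."""
    different = candidate.lower() != legitimate.lower()
    return different and normalize(candidate) == normalize(legitimate)


print(looks_like("grnail.co.uk", "gmail.co.uk"))
print(normalize("hotrnail.co.uk"))
```

Note that this also flags honest domains that merely contain "rn", so it is only good for shortlisting candidates to look at by hand.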

I got core count shamed by a client today. He has a 64 Core ryzen and I have a quad core I7.

I want to upgrade I do! But the new tech coming out this year is just too good to not wait for! Plus I waited 8 years, I can wait a few more months. Right????10 -

Things that seem "simple" but end up taking a long ass time to actually deploy into production:

1. Using a new payment processor:

"It's just a simple API, I'll be done in 2 hours"

LOL sure it is, but testing orders and setting up a sandbox or making sure you have credentials right, and then switching from test to live and retesting, and then... fuck

2. Making changes to admin stats.

"I just have to add this column and remove that one... maybe like a couple of hours"

YOU WISH

3. Anything Javascript

"Hah, what, that's like a button, np"

125 minutes later...

console.log('before foo');

console.log(this.foo)

etc..2 -

Can someone help me understand?

I subscribed to a nifty IT-related magazine, and on its back there's an ad for "Dedicated root server hosting". Nothing unusual at first glance, but after I read the issue, I decided to humor them and see what it is that they offered, and... It just... Doesn't make sense to me!

An ad for "Dedicated Root Server" - What is a dedicated root server first of all? Root servers of any infrastructure sound pretty important.

But, the ad also boasts "High speed performance with the new Intel Core i9-9900K octa-core processor", that's the first weird thing.

Why would anyone responsible enough want to put an i9 into a highly-reliable root server, when the thing doesn't even support ECC? Also, come on, octa-core isn't much, I deal with servers that have anywhere between 2 and 24 cores. 8 isn't exactly a win, even if it has a higher per-core clock.

Oh, also, further down the ad has a list of, seeming, advantages/specs of the servers, they proclaim that the CPU "incl. Hyper-Threading-Technology"... Isn't that... Standard when it comes to servers? I have never seen a server without hyperthreading so far at my job.

"64 GBs of DDR4 RAM" - Fair enough, 64 gigs is a good amount, but... Again, its not ECC, something I would never put into a server.

"2 x 8 TB SATA Enterprise Hard Drive 7200 rpm" - Heh, "enterprise hard drive", another cheap marketing word, would impress me more if they mentioned an actual brand/model, but I'll bite, and say that at least the 7200 rpm is better than I expected.

"100 GBs of Backup Space" - That's... Really, really little. I've dealt with clients who's single database backup is larger than that. Especially with 2x8 TB HDD (Even accounting for software raids on top)

This one cracks me up - "Traffic unlimited"

Whaaaat?! You are not gonna give me a limit to the total transferred traffic to the internet for my server in your data center? Oh, how generous of you, only, the other case would make the server just an expensive paperweight! I thought this ad was for semi-professionals at least, so why mention traffic, and not bandwidth, the thing that matters much more when it comes to servers? How big of a bandwidth do I get? Don't tell me you use dialup for your "Dedicated Root Server"s!

"Location Germany or Finland" - Fair enough, geolocation can matter when it comes to latency.

"No minimum contract" - Oooh, how kiiiind of you, again, you are not gonna charge me extra for using the server only as long as I pay? How nice!

"Setup Fee £60" - I guess, fair enough, the server is not gonna set itself up, only...

The whole ad is for "monthly from £55.50", that's quite the large fee for setup.

Oh, and a cherry on top, the tiny print on the bottom mentions: "All prices exclude VAT and are a subject to..." blah blah blah.

Really? I thought that this sort of borderline customer deceit was present only in the common people's sphere!

I must say, there's being unimpressed, and then... There's this. Why, just... Why? Anyone understands this? Because I don't...12 -

Hey Hey!

Have a look at my latest Ubuntu theme.

Displaying CPU-Power Manager, where I can overclock and take control of my processor.

Drop-down terminal with a transparent GNOME theme.

Quite far to go for someone with limited knowledge at the moment.

Any advice and feedback is welcome! :)

9 -

I don't mind Apple marketing themselves as these revolutionary thinkers and innovators, because I figured most people see behind the marketing but appreciate Apple for what it is. It's a big company that makes well built products, that are efficient and give good support to those products.

But I'm sick to death of tech journalists talking about how every new feature is the death of Android. They have to be kidding themselves if they think what Apple's doing is innovating. Samsung's been designing screens for the bezelless market for a LONG time, and their technology in that is incredibly advanced (it's why if you use their iPhone x you'll be looking at a screen from Samsung!)

They finally adopted wireless charging and pretended it was brand new, but I remember when they came out with the Apple watch, marketing it like they'd broken ground when Android Wear watches had been out for a year!

I don't want people to think I hate Apple, I own a few of their products. I think they're remarkably invested in user privacy; HomeKit imo is one of the most forward-thinking implementations of smart home technology that I've seen, and the new processor in the iPhone X is a mammoth powerhouse. So, I'm not necessarily saying anything about that, but what I am saying is that they're incredible at marketing, and fanboys are not self-aware enough to recognize when the Designed-by-Apple hype overshadows the actual objectivity of the situation. There are articles already talking about Apple's wireless charging.

TL;DR I swear to god if an apple fanboy comes at me saying the bezelless design was Apple's innovation, I'm going to snap. I appreciate what Apple does well, but unfortunately people can't appreciate a product without needing to identify with it.6 -

Oh I have quite a few.

#1 a BASH script automating ~70% of all our team's work back in my sysadmin days. It was like a Swiss army knife. You could even do `ScriptName INC_number fix` to fix a handful of types of issues automagically! Or `ScriptName server_name healthcheck` to run HW and SW healthchecks. Or things like `ScriptName server_name hw fix` to run HW diags, discover faulty parts, schedule a maintenance timeframe, raise a change request to the appropriate DC and inform service owners by automatically chasing them for CHNG approvals. Not to mention you could `ScriptName -l "serv1 serv2 serv3 ..." doSomething` and similar shit. I am VERY proud of this util. My employer liked it as well and got me awarded. Bought a nice set of Swarovski earrings for my wife with that award :)

#2 a JAVA sort-of-lib - a ModelMapper - able to map two data structures with a single util method call. Defining datamodels like https://github.com/netikras/... (note the @ModelTransform anno) and mapping them to my DTOs like https://github.com/netikras/... .

#3 a @RestTemplate annotation processor / code generator. Basically this dummy class https://github.com/netikras/... will be a template for a REST endpoint. My anno processor will read that class at compile-time and build: a producer (a Controller with all the mappings, correct data types, etc.) and a consumer (a class with the same methods as the template, except when called these methods will actually make the required data transformations and make a REST call to the producer and return the API response object to the caller) as a .jar library. Sort of a custom swagger, just a lil different :)

I had #2 and #3 opensourced but accidentally pushed my nexus password to gitlab. Ever since my utils are a private repo :/3 -

Who are devranters?

I know many devs and very few of them run Linux as their primary OS. And I've never met a single one using Arch.

Also, hardly any use Vim as their primary IDE...or even editor.

Yet, if DevRant was my first introduction to devs I'd be down Best Buy looking for a laptop (why so many laptops here?) running Arch and Vim as my word processor.

Don't misunderstand me---I have nothing against Arch and Vim. I don't give a rat's arse about the OS on my machine as I'm mostly in apps. I'm sure Arch would be fine. And whatever floats anyone's boat is fine by me.

But where are all the devs maintaining VB6 apps using XP? Is the community inclusive enough to welcome them?

Where are the "dark matter" devs? Lurking? Speak up!

Now, it may be that, say, China and India run on Arch Linux and Vim and I have a limited perspective. If so, Wow! My eyes are opened.10 -

OK so... project I've been working on! It's a virtual processor that runs in the browser coded in JavaScript. OK so I know, I know, you must be thinking, "this is crazy!" "Why would she do this?!?!" and I understand that.

The idea of Tangible is to see if I can get any tangible performance over JavaScript. I've posted a poorly drawn diagram below showing how Tangible works.

The goal for tangible is to not use html, javascript, or CSS. Instead, you would use, say for instance, c++ and write your web page in that, then you compile it using my clang plugins and out pops your bytecode for Tangible. No more CSS, no more html, and no more javascript. Instead everything from a textbox to a video on your web page is an object, each object can be placed into a container, each container follows specific flag rules like: centerHorizontal or centerVertical.
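To make the container flags concrete, here is a rough sketch of what a container might compute for a child's position. Only the flag names `centerHorizontal` and `centerVertical` come from the post; `Box`, `place` and the top-left default are assumptions for illustration, not how Tangible actually does it:

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Box:
    width: int
    height: int


def place(child: Box, container: Box,
          center_horizontal: bool = False,
          center_vertical: bool = False) -> Tuple[int, int]:
    """Return the child's (x, y) offset inside the container.

    Assumption: without a flag the child sits at the top-left corner.
    """
    x = (container.width - child.width) // 2 if center_horizontal else 0
    y = (container.height - child.height) // 2 if center_vertical else 0
    return x, y


textbox = Box(width=200, height=50)
page = Box(width=800, height=600)
print(place(textbox, page, center_horizontal=True))
print(place(textbox, page, center_horizontal=True, center_vertical=True))
```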

Added to all of this you get the optimization of the llvm optimizer.

18 -

PayPal = GayPal

PHASE 1

1. I create my personal gaypal account

2. I use my real data

3. Try to link my debit card, denied

4. Call gaypal support via international phone number

5. Guy asks me for my full name email phone number debit card street address, all confirmed and verified

6. Finally i can add my card

PHASE 2

7. Now the account is temporarily limited and in review, for absolutely no fucking reason, need 3 days for it to be done

8. Five (5) days later still limited i cant deposit or withdraw money

9. Call gaypal support again via phone number, burn my phone bill

10. Guy tells me to wait for 3 days and he'll resolve it

PHASE 3

11. One (1) day later (and not 3), i wake up from a yellow account to a red account where my account is now permanently limited WITHOUT ANY FUCKING REASON WHY

12. They blocked my card and forever blocked my name from using gaypal

13. I contact them on twitter to tell me what their fucking problem is and they tell me this:

"Hi there, thank you for being so patient while your conversation was being escalated to me. I understand from your messages that your PayPal account has been permanently limited, I appreciate this can be concerning. Sometimes PayPal makes the decision to end a relationship with a customer if we believe there has been a violation of our terms of service or if a customer's business or business practices pose a high risk to PayPal or the PayPal community. This type of decision isn’t something we do lightly, and I can assure you that we fully review all factors of an account before making this type of decision. While I appreciate that you don’t agree with the outcome, this is something that would have been fully reviewed and we would be unable to change it. If there are funds on your balance, they can be held for up to 180 days from when you received your most recent payment. This is to reduce the impact of any disputes or chargebacks being filed against you. After this point, you will then receive an email with more information on accessing your balance.

As you can appreciate, I would not be able to share the exact reason why the account was permanently limited as I cannot provide any account-specific information on Twitter for security reasons. Also, we may not be able to share additional information with you as our reviews are based on confidential criteria, and we have no obligation to disclose the details of our risk management or security procedures or our confidential information to you. As you can no longer use our services, I recommend researching payment processors you can use going forward. I aplogise for any inconvenience caused."

PHASE 4

14. I see they basically replied in context of "fuck you and suck my fucking dick". So I reply aggressively:

"That seems like you're a fraudulent company robbing people. The fact that you can't tell me what exactly have i broken for your terms of service, means you're hiding something, because i haven't broken anything. I have NOT violated your terms of service. Prove to me that i have. Your words and confidentially means nothing. CALL MY NUMBER and talk to me privately and explain to me what the problem is. Go 1 on 1 with the account owner and lets talk

You have no right to block my financial statements for 180 days WITHOUT A REASON. I am NOT going to wait 6 months to get my money out

Had i done something wrong or violated your terms of service, I would admit it and not bother trying to get my account back. But knowing i did nothing wrong AND STILL GOT BLOCKED, i will not back down without getting my money out or a reason what the problem is.

Do you understand?"

15. They reply:

"I regret that we're unable to provide you with the answer you're looking for with this. As no additional information can be provided on this topic, any additional questions pertaining to this issue would yield no further responses. Thank you for your time, and I wish you the best of luck in utilizing another payment processor."

16. ARE YOU FUCKING KIDDING ME? I AM BLOCKED FOR NO FUCKING REASON, THEY TOOK MY MONEY AND DONT GIVE A FUCK TO ANSWER WHY THEY DID THAT?

HOW CAN I FILE A LAWSUIT AGAINST THIS FRAUDULENT CORPORATION?12 -

One thing that’s a shocker and frankly very weird for people who have always used Android, is that iPhone doesn’t show any progress notif for anything whatsoever. Like dude.. I want things to happen in background and see progress in notif bar. But no, not in iPhone. You either wait for things to finish in foreground or do it explicitly inside the relevant app.

For example, when you want to send a big video on WhatsApp via Photos, you have to wait on the Photos screen until it’s sent otherwise it fucking fails. Like dude.. wtf?! Why can’t that happen in background?

On top of this, things that can happen in background get so little processing power (because iPhone doesn’t like things happening in background; we have already established that though) that they just crawl until done and sometimes fail.

Another thing is that there are no fucking loading indicators. You touch something and then the guessing game starts whether you touched it correctly or not. Like dude.. I know your phone got a superfast processor but sometimes things take time to happen. You gotta give some kind of indication that things are happening ffs!

I know security and all, but dude you gotta give me something! Don’t make me suffer for little things.

Dude.. fuck you!

-

Fuck Windows 10. Period.

An amateur shit-show of junk. If you have an i3 processor it will find a way to choke it to 80% with the bloody audiodg.exe.

I have an i7 and Windows Audio Device Graph Isolation takes 25% CPU to play a YouTube video and 12-13% when idle.

Junk spaghetti with some half-useless UI over the same settings that were available in much older Windows versions.

I hate having a decent laptop with 16 GB RAM, a 512 GB SSD, a Radeon and so on, only for it to be disabled and abused by Windows and Chrome.

-

Many people asked me this.

Every programming language is made of another, and because assembly is the lowest-level language, every language is ultimately made of it. So what is assembly made of?

...

When you buy a vacuum cleaner, they give you instructions on how to use it. When a processor producer creates a processor, they give you instructions on how to use it. Assembly programming language is nothing but the set of instructions that the processor producer gave us.

-
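To make the vacuum cleaner analogy a bit more concrete, here is a minimal sketch of a toy assembler in Python. It only knows four x86 instructions, and the `OPCODES` table and `assemble` helper are made up for illustration, but the byte values are the ones the processor manual assigns to those mnemonics. The point is that each line of assembly is just a readable name for bytes the manufacturer defined.

```python
import struct

# A tiny, hand-picked slice of the x86 instruction set.
# Each entry is a rule from the vendor's manual: mnemonic -> opcode byte.
OPCODES = {
    "nop": b"\x90",  # do nothing
    "ret": b"\xc3",  # return to caller
    "hlt": b"\xf4",  # halt until the next interrupt
}


def assemble(line: str) -> bytes:
    """Translate one line of toy assembly into machine-code bytes."""
    parts = line.replace(",", " ").split()
    mnemonic = parts[0].lower()
    if mnemonic == "mov" and parts[1].lower() == "eax":
        # "mov eax, imm32" is encoded as B8 followed by the 32-bit value,
        # least significant byte first (little-endian).
        return b"\xb8" + struct.pack("<I", int(parts[2], 0))
    return OPCODES[mnemonic]


program = ["mov eax, 42", "nop", "ret"]
machine_code = b"".join(assemble(line) for line in program)
print(machine_code.hex(" "))  # b8 2a 00 00 00 90 c3
```

A real assembler handles thousands of encodings, operand sizes and addressing modes, but it is still the same kind of lookup.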

Conversation yesterday (senior dev and the mgr)..

SeniorDev: "Yea, I told Ken when using the service, pass the JSON string and serialize to their object. JSON eliminates the data contract mismatch errors they keep running into."

Mgr: "That sounds really familiar. Didn't we do this before?"

SeniorDev: "Hmmm...no. I doubt anyone has done this before."

Me: "Yea, our business tier processor handled transactions via XML. It allowed the client and server to process business objects regardless of platform. Partners using Perl, clients using Delphi, website using .aspx, and our SQLServer broker even used it."

Mgr: "Oh yea...why did we stop using it?"

Me: "WCF. Remember, the new dev manager at the time and his team broke up the business processor into individual WCF services."

Mgr: "Boy, that was a crap fest. We're still fighting bugs from the mobile devices. Can't wait until we migrate everything to REST."

SeniorDev: "Yea, that was such a -bleep-ing joke."

Me: "You were on Jake's team at the time. You were the primary developer in the re-write process saying passing strings around wasn't the way true object-oriented developers write code.

So it's OK now because the string is in JSON format or because using a JSON string is your idea?"

SeniorDev turns around at his desk and puts his headphones back on.

That's right you lying SOB...I remember exactly the level of personal attacks you spewed on me and other developers behind our backs for using XML as the message format.

Keep your fat ass in your seat and shut the hell up.

-

WHY IS IT SO FUCKIN ABSURDLY HARD TO PUSH BITS/BYTES/ASM ONTO PROCESSOR?

I have bytes that I want run on the processor. I should:

1. write the bytes to a file

2a. run a single command (starting virtual machine (that installed with no problems (and is somewhat usable out-of-the-box))) that would execute them, OR

2b. run a command that would image those bytes onto (bootable) persistent storage

3b. restart and boot from that storage

But nooo, that's too sensible, too straightforward. Instead I need to write those bytes as a parameter into a c function of "writebytes" or whatever, wrap that function into an actual program, compile the program with gcc, link the program with whatever, whatever the program, build the program, somehow it goes through some NASM/MASM "utilities" too, image the built files into one image, re-image them into hdd image, and WHO THE FUCK KNOWS WHAT ELSE.

I just want... an emulator? probably. something. something which out of the box works in a way that I provide file with bytes, and it just starts executing them in the same way as an empty processor starts executing stuff.

What's so fuckin hard about it? I want the iron here, and I want a byte funnel into that iron, and I want that iron to run the bytes i put into the fuckin funnel.
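For what it's worth, on a legacy-BIOS x86 machine (or an emulator of one) the funnel can be fairly short. The sketch below assumes that setup: the BIOS loads the first 512-byte sector of the boot disk to address 0x7C00 and jumps to it, as long as the sector ends with the bytes 55 AA. The helper `make_boot_sector` and the file name `boot.img` are made up for this example.

```python
from pathlib import Path

SECTOR_SIZE = 512
BOOT_SIGNATURE = b"\x55\xaa"  # a legacy BIOS only boots a sector ending in these bytes


def make_boot_sector(code: bytes) -> bytes:
    """Pad raw machine code to one 512-byte sector and append the boot signature."""
    room = SECTOR_SIZE - len(BOOT_SIGNATURE)
    if len(code) > room:
        raise ValueError(f"code is {len(code)} bytes, only {room} fit in a boot sector")
    return code.ljust(room, b"\x00") + BOOT_SIGNATURE


# The bytes to run: EB FE encodes `jmp $`, an endless loop in 16-bit real mode.
code = bytes([0xEB, 0xFE])
image = make_boot_sector(code)

# Hypothetical output name; this file is a complete bootable raw disk image.
Path("boot.img").write_bytes(image)
```

With QEMU installed, `qemu-system-x86_64 -drive format=raw,file=boot.img` should boot that image directly; writing the same file to the first sector of a USB stick covers the 2b/3b route. UEFI-only machines won't boot it.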

Fuckin millions of indirection layers. Fuck off. Give me an iron, or a sensible emulation of that iron, and give me the byte funnel, and FUCK THE FUCK AWAY AND LET ME PLAY AROUND.

-

Just had an argument with someone who thinks (Micro)Python is the way to go for embedded projects, 'cause a lot of engineers are terrible at using C/C++. Their argument is that optimisation and granular control over what the processor does are not necessary.

It's utterly wrong to push technologies into areas they weren't originally designed for. We've seen it a lot with websites lately and I don't like that embedded is heading the same way!

-

can i work in any more horrible company than this?

> got a shitty macbook air as official work laptop. i am an Android dev btw, and fuck knows how long it took to build apps on this, but it was still okay

> after 1 year some keys started getting slow to respond but still working fine

> recently a Senior dev raised a request for better laptops and somehow we all got macbook pros with good ram/processor

> returned my old laptop, got a mail after few seconds that my laptop has liquid damage! (in retrospect, i think i knew it as my bag once got drenched in rain)

> few days later, a mail chain starts where some guy is asking for $300 approval of fixes from my boss's boss!

now fuck knows how is it going to get paid, but i cant afford it on my monthly salary.

i am already on a tight crunch as my dad recently lost his job and i am paying emis for a car loan as well as a hand fracture loan, but i am surprised that i am getting notified about this.

afaik,

1. the laptop's whole value is around $350 (some corporate quote that i once saw).

2. the laptops should be fucking insured (we ourselves are a fuckin general insurance company) as it's an obvious norm for corporate equipment. i shouldn't be penalised for this

3. i was working fine with this laptop and i can still work on it if given back.

4. this can be deducted at the time of fnf or from gratuity fund that these assholes hold onto until a guy completes 5 years and take it all for themselves if he doesn't.

5. i can buy this shitty laptop back and use it as my personal device, or get it repaired for less.

i don't even claim to have damaged it, why are they putting it on me 😭😭😭

-

Don't feed the pigeons.

A cautionary tale.

When you feed the pigeons they keep coming back. They don't stop pestering you for help, and they don't ever listen to you.

I gave my father-in-law my old laptop, and installed the latest version of Office 2016 because I'm a nice guy.

Now, every week at family dinner there's something he needs me to help him with.

Mind you, his previous computer had Windows XP and the one I gave him had Windows 7. So it was quite the tech upgrade for him.

Except one of his octogenarian siblings wrote a family recipe book, and wrote it in Word Processor. (because Old People!) Well fuck of course it has pictures, clip art, special formatting, vertical and horizontal lines. It worked fine on XP because Word Processor was supported by XP.

The following is me explaining to him over the phone why his recipe book wouldn't load into Word. I was in his house picking up 2000 rounds of ammo for my and my wife's pistols (target practice) while he was out and about.

FIL: "It's the link on the desktop. It comes up in Word on the old computer but when I tried to put it on the new computer it wouldn't work. I used a thumb drive."

Me: "Okay well I tried to..."

FIL: "I don't know why it would work in Word on one computer and not the next."

Me: "Okay, well I clicked on the link to the file on your old desktop and it opened in Word Processor, not Word."

FIL: "No it opens in Word on the old computer, but it won't open on the new one."

Me: "It opens in Word Processor on the old computer, it won't open in Word on..."

FIL: "Which computer are you sitting at? The old one is on the left." (as if I wouldn't recognize the computer I had for three years and just gave him a month ago!)

Me: "The old one."

FIL: "Okay so it should open in Word on the old computer."

Me: "It won't. It will open in..."

FIL: "I was thinking maybe it had something to do with a screen that popped up when I logged in to the new computer. Something about antivirus software?"

Me: "It will open in Word Processor on your old computer, but it isn't formatted..."

FIL: "Yeah, it's a '.-w-p-s' file so it should work in Word."

Me: "Word Processor is a different program from Word. This opens in Word Processor."

(long silence)

FIL: "So which one do I have?"

Me: "You have Word Processor on the old computer."

FIL: "So how do I get Word Processor on the new computer?"

Me: "You don't. It is defunct software, it was discontinued ten years ago. You can try to get a converter online, but there's no guarantee it'll work."

FIL: "Alright, I'll be home in a few minutes. I'll take a look then."

This was at 10pm last night, and I'd been out all day since 7:30am. He still didn't believe me that the book was written in Word Processor until I showed him the different startup screen for Word Processor, where it says "Word Processor" plain as day.

I fed the pigeon. And it looks like there's more of this to come.

-

CIA – Computer Industry Acronyms

CD-ROM: Consumer Device, Rendered Obsolete in Months

PCMCIA: People Can’t Memorize Computer Industry Acronyms

ISDN: It Still Does Nothing

SCSI: System Can’t See It

MIPS: Meaningless Indication of Processor Speed

DOS: Defunct Operating System

WINDOWS: Will Install Needless Data On Whole System

OS/2: Obsolete Soon, Too

PnP: Plug and Pray

APPLE: Arrogance Produces Profit-Losing Entity

IBM: I Blame Microsoft

MICROSOFT: Most Intelligent Customers Realize Our Software Only Fools Teenagers

COBOL: Completely Obsolete Business Oriented Language

LISP: Lots of Insipid and Stupid Parentheses

MACINTOSH: Most Applications Crash; If Not, The Operating System Hangs

AAAAA: American Association Against Acronym Abuse.

WYSIWYMGIYRRLAAGW: What You See Is What You Might Get If You’re Really Really Lucky And All Goes Well.

-

Well, I wanna specialize in low-level software as I get older. Everyone is telling me to go out and learn a processor architecture. I'm willing to be patient, so I do what people recommend to me and I download the Intel x86_64 manual. I was excited... UNTIL I REALIZED THE MANUAL WAS 4474 PAGES LONG! Like, how am I supposed to jump into assembly, machine language, and low-level programming with a beginner's task like that? I cannot find ANY resources online to simplify the transition, and college sure ain't gonna teach me anytime soon.

-

I want to play GTA V on full specs - but my computer doesn't have enough juice. Anyone know the name of the website where I can download an i7 processor, 5TB of Memory, and 16GB RAM? I have already tried SourceForge. Please post the link below, if you happen to know one.

-

Upscaling a prod database which was running on an 8 year old Dell desktop used as a server. It had about 2MB of RAM and an Intel Core 2 processor...

This was the day I learned a lot about querying the database as efficiently as humanly possible.

-

Windows makes me genuinely angry. Why is it that when I boot my computer, I am expected to wait 10+ minutes for Windows to launch 5 startup applications, most of which are already patches for things that should be there to begin with, before I can even begin to use Explorer to open GeForce Experience because for some reason, Windows said "Graphics drivers?! Who needs those?!" and threw them out the window! And then I get notifications about apps needing permissions to things, BUT IT WONT TELL ME WHICH ONE! I clicked the update driver notification 5 minutes ago and the installer literally just now opened up. This is a computer with an r3 processor and gtx970! It may not be the best, but it is by no means underpowered! Why must Halo online not have a Linux version? :(

-

ME - me, TM - teammate

I was just recruited to the company. We're starting a new project based on a few modules.

ME: So this module will do X and Y, I will use good old interfaces and design based on abstractions so that stuff does not get glued too much.

TM: But why? Make good old processor with all the logic and throw objects at it.

ME: B-but unit tests, decomposition and other stuff...

TM: *insists and forces me to agree*